Brief #9 – Evaluating Student Progress and Outcomes

Intervention Simplified

Evaluating student progress and outcomes of a classwide SEL intervention program

Stephen N. Elliott, PhD

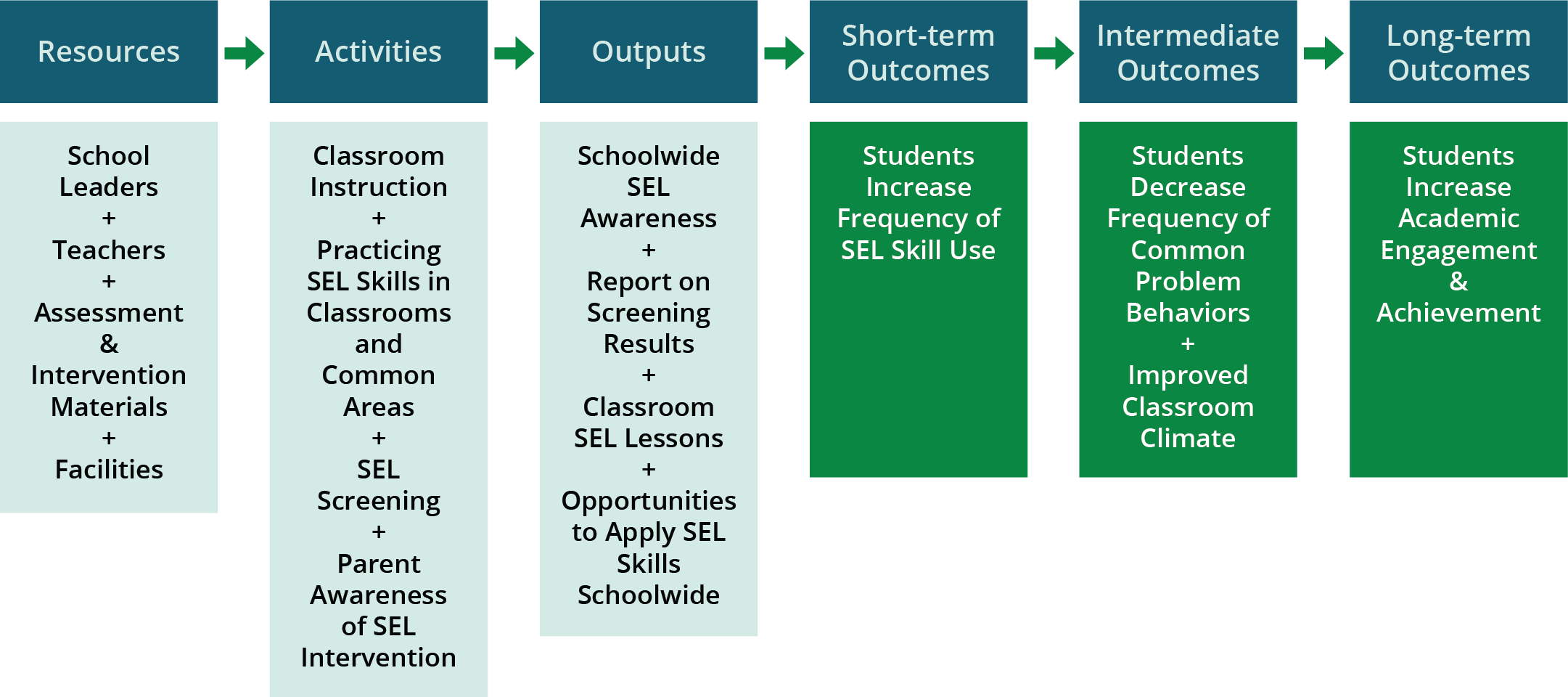

When a new educational program is implemented for students, it is expected to change students’ behavior and/or improve their academic performance in measurable ways. In the case of social–emotional learning (SEL) interventions, many researchers and policy leaders expect effective interventions to have several positive effects on students. Such expectations are based on a theory of change (like that illustrated below) with rigorous evidence to support it.

For example, DiPerna and colleagues (2015, 2016, 2017), in a randomized controlled trial in six elementary schools funded by the U.S. Department of Education, provided strong evidence to support the SSIS SEL Edition Classwide Intervention Program’s (CIP; Elliott & Gresham, 2017a) six-phase program. Specifically, these researchers reported that using the six-phase process resulted in students (1) learning social–emotional skills and performing these desired behaviors more frequently, (2) concurrently reducing the frequency of many common externalizing problem behaviors, and (3) increasing academic engagement and, in some cases, achievement test scores.

The Collaborative for Academic, Social, and Emotional Learning (CASEL) published a guide to SEL programs in 2012 with these expectations: more frequent positive social behavior, fewer conduct problems, less emotional stress, and more academic success. Many current SEL researchers and policy leaders support the expectation that effective SEL programs have a triple positive impact on students.

Sound accountability and ethical practice, however, dictate that educators support their theories of change with actual evidence that such programs work as intended, rather than simply accepting testimonials or research reports based on different students in different schools. Many educators struggle to evaluate SEL intervention programs for at least three reasons. First, the use of control or comparison groups is often not desirable in such evaluation efforts. Second, repeatedly assessing many students to document program-related changes is time-consuming and can be expensive. Third, most existing assessments available to measure intervention effectiveness are poorly aligned with the skills being taught in SEL programs. These challenges can be overcome, however, and addressing them often leads to program improvements that help sustain an SEL program.

In this brief, we examine a systematic procedure for evaluating an SEL program and how to support it with technically sound assessments. Program evaluation is complex and has been discussed in entire books and professional standards (e.g., U.S. Department of Education, 2014; Yarbrough, Shulha, Hopson, & Caruthers, 2011). Thus, consider this brief a starting point for planning an evaluation of an SEL intervention program and selecting assessment tools to measure and document the expected outcomes.

Planning for and documenting expected changes in students’ behavior

Before collecting data for your evaluation, you must decide on an overall evaluation design and select assessments that measure the behaviors and skills targeted for change. If your intervention is expected to have an effect on students’ social–emotional skills, problem behaviors, and academic achievement, you will need assessments for each of these areas. In addition, it should be possible to administer these assessments at least twice: once at the beginning of the program to establish a baseline and again after the program is completed to document any change from that baseline. Ideally, for comparison purposes, you would also have a control group of very similar students in a very similar school who are not receiving the intervention program during the same period. Such a comparison would allow you to determine the potential added value of the intervention program over typical educational practices and student growth. When a comparison or control group is not feasible, you can use a pre-intervention to post-intervention design to document the direction and magnitude of any changes in students’ behavior.
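As a rough illustration of what documenting "direction and magnitude" can look like in a pre-intervention to post-intervention design, the sketch below summarizes paired scores for the same students at two time points. The function name `summarize_change` and the scores are hypothetical and are not drawn from any SSIS SEL Edition report; the sketch also assumes that higher scores indicate better functioning.

```python
from statistics import mean


def summarize_change(pre, post):
    """Summarize paired pre/post scores for the same students.

    Assumes pre[i] and post[i] belong to the same student and that
    higher scores mean better functioning (an assumption of this sketch).
    """
    if not pre or len(pre) != len(post):
        raise ValueError("pre and post must be non-empty and the same length")
    gains = [after - before for before, after in zip(pre, post)]
    return {
        "mean_pre": mean(pre),
        "mean_post": mean(post),
        "mean_gain": mean(gains),                   # magnitude of change
        "improved": sum(g > 0 for g in gains),      # direction, per student
        "declined": sum(g < 0 for g in gains),
        "unchanged": sum(g == 0 for g in gains),
    }


# Hypothetical scores for five students (illustration only).
pre_scores = [10, 12, 9, 14, 11]
post_scores = [13, 12, 12, 15, 10]
summary = summarize_change(pre_scores, post_scores)
print(summary)
```

Without a comparison group, a positive mean gain describes what changed but cannot by itself separate the program's contribution from typical student growth.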

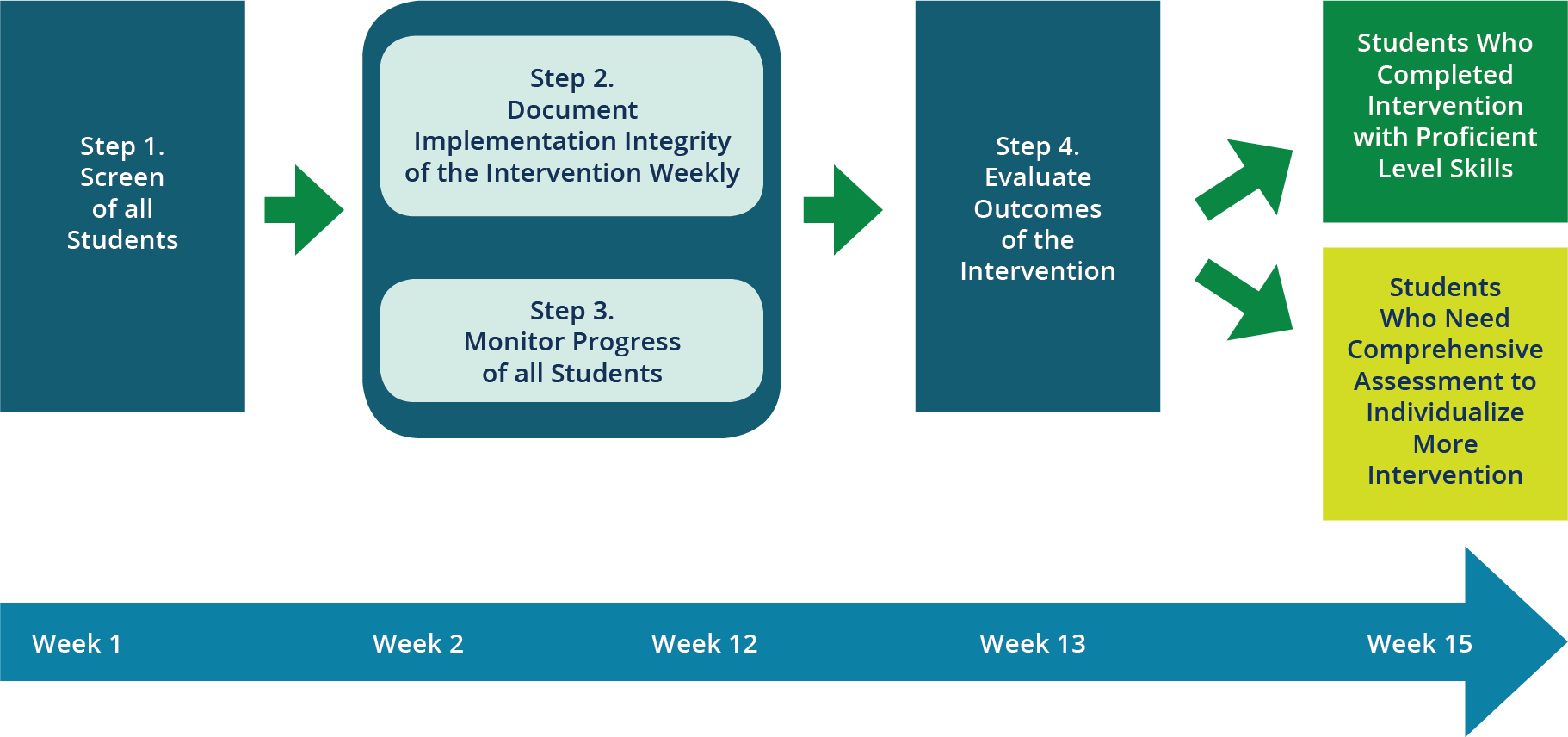

The intervention evaluation process

A basic evaluation of an SEL intervention program should answer three questions: Was the intervention implemented as designed? Did the intervention have the desired effect on students? Were there any unintended negative effects for students or users of the intervention? To collect and synthesize the evidence needed to answer these questions, follow the four-step process illustrated here. (Note: the amount of time needed for this process is a function of the length of the intervention program; some programs are significantly longer than illustrated here.)

Tools for assessing students’ social and academic skills

The SSIS SEL Edition CIP and assessment components (Elliott & Gresham, 2017b; Gresham & Elliott, 2017) were expressly designed for use in a multi-tiered support system to capture the status and growth of students’ social–emotional skills and related problem and academic behaviors. The SSIS SEL Edition assessments measure skills representative of the social–emotional competencies of self-awareness, self-management, social awareness, relationship skills, and responsible decision making (CASEL, 2012). Specifically, the SSIS SEL Edition consists of three coordinated and content-aligned components designed to measure each of the social–emotional and academic competencies.

• SSIS SEL Edition Screening/Progress Monitoring Scales: This brief (30 to 40 minutes), class-based, criterion-referenced set of rating rubrics is used to assess student-based strengths and improvement areas across five SEL and three academic competencies. This assessment is completed by teachers to identify students’ levels of SEL and academic functioning and can also be used to evaluate student progress in and outcomes of skill development programs that teach the CASEL SEL competencies.

• SSIS SEL Edition Teacher, Parent, and Student Forms: These norm-referenced rating scales can be completed in 10 minutes or less by a teacher, parent, or student, and provide different viewpoints on the social–emotional functioning of individual students ages 3 to 18 years. The Teacher Form consists of 58 items and is used to generate scores for each of the five SEL competencies, as well as a score for academic competence. The Parent Form (51 items) and the Student Form (46 items) are used to generate scores for the five SEL competencies and are available in English and Spanish.

• SSIS SEL Edition Intervention Integrity and Outcome Evaluation Report: This teacher- or observer-completed form is used as a formative measure to document the degree to which an intervention skill unit was implemented as planned. For each skill unit and instructional step (i.e., Tell, Show, Do, Practice, Monitor Progress, and Generalize), users record an implementation rating (2 = fully, 1 = partially, 0 = not at all). These ratings are summed to yield a total implementation percentage, with a goal of greater than 80% indicating high intervention integrity. Users also record evaluative judgments about the outcome of each intervention unit on a 4-point scale (4 = highly effective, 3 = moderately effective, 2 = minimally effective, 1 = not effective). Both the integrity and effectiveness ratings are completed after teaching a skill lesson.
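The integrity rating described in the last component lends itself to a short worked calculation. The sketch below assumes the total implementation percentage is the sum of the step ratings divided by the maximum possible sum (2 points per step); the brief does not spell out the exact formula, so treat this as one plausible reading. The function names and the example ratings are hypothetical.

```python
from typing import Dict

# Instructional steps named in the brief for each skill unit.
STEPS = ("Tell", "Show", "Do", "Practice", "Monitor Progress", "Generalize")
MAX_RATING = 2  # 2 = fully, 1 = partially, 0 = not at all


def implementation_percentage(ratings: Dict[str, int]) -> float:
    """Return summed ratings as a percentage of the maximum possible total.

    Assumption of this sketch: percentage = sum / (2 x number of steps) x 100.
    """
    for step in STEPS:
        if step not in ratings:
            raise ValueError(f"Missing rating for step: {step}")
        if ratings[step] not in (0, 1, 2):
            raise ValueError(f"Rating for {step} must be 0, 1, or 2")
    total = sum(ratings[step] for step in STEPS)
    return 100.0 * total / (MAX_RATING * len(STEPS))


def meets_integrity_goal(percentage: float, goal: float = 80.0) -> bool:
    """The brief states a goal of greater than 80% for high integrity."""
    return percentage > goal


# Hypothetical ratings for one skill unit (illustration only).
unit_ratings = {
    "Tell": 2, "Show": 2, "Do": 2,
    "Practice": 1, "Monitor Progress": 2, "Generalize": 1,
}
pct = implementation_percentage(unit_ratings)
print(f"{pct:.1f}% implemented; goal met: {meets_integrity_goal(pct)}")
```

In this example, two partially implemented steps out of six still leave the unit above the 80% goal, while a third partial rating would drop it to 75%.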

Documenting and interpreting change resulting from interventions

The SSIS SEL Edition Screening/Progress Monitoring Scales and the Rating Forms can be administered and scored digitally, or administered on paper and scored digitally. The reports identify relative strengths and weaknesses in social–emotional and academic functioning at individual and group levels. Reports for the Screening/Progress Monitoring Scales also are available via Review360® and can facilitate data management for an evaluation of students at two points in time: pre-intervention (Time 1) and post-intervention (Time 2). All reports are organized to address six questions:

(1) How many students were screened at Time 1 and Time 2?

(2) What percentages of these students were identified at each level of social–emotional competence at Time 1 and Time 2?

(3) What percentages of these students were identified at each level of academic competence at Time 1 and Time 2?

(4) Based on the screening for the entire group, which areas of social–emotional competence are relative strengths and which areas need more development at Time 1 and Time 2?

(5) Based on the screening for the entire group, which areas of academic functioning are relative strengths and which areas need more development at Time 1 and Time 2?

(6) Given the assessment results, which social–emotional skills are priorities for intervention at Time 1 and Time 2?

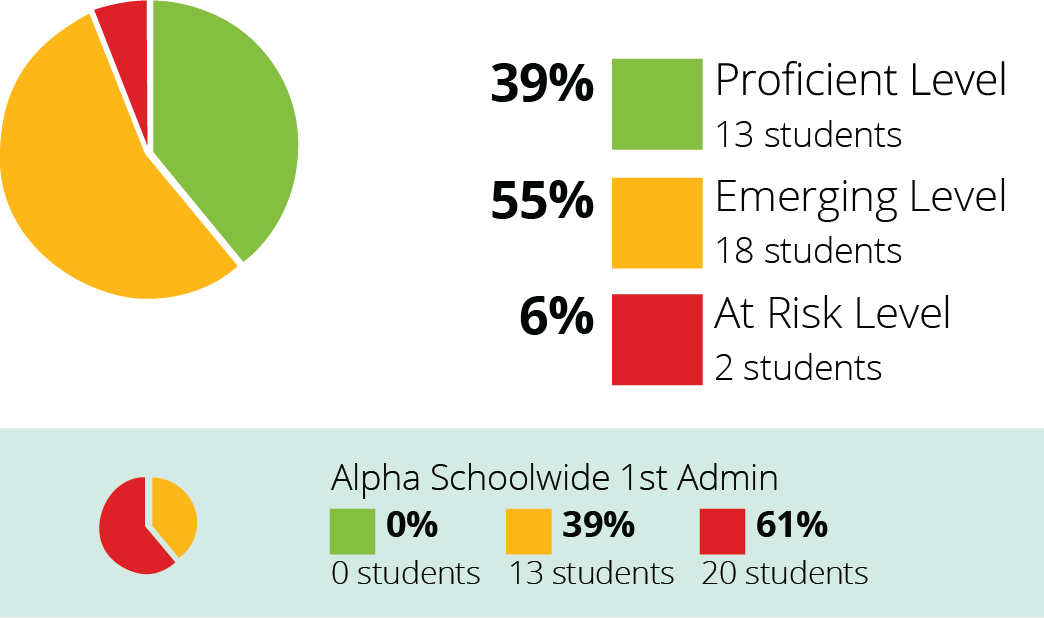

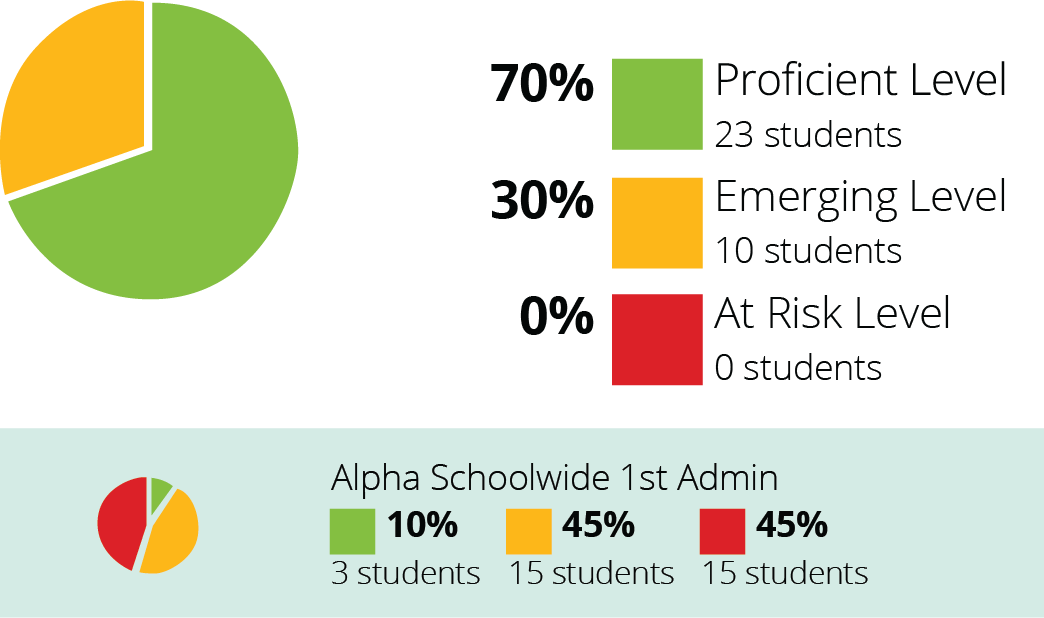

The reports include illustrations to facilitate intervention evaluation decisions. Specifically, as shown in the upper set of pie charts for social–emotional competencies, the assessment results at Time 1 indicated 61% of students were at the At-Risk Level and 0% were at the Proficient Level, whereas at Time 2 the percentage of students shifted to 6% at the At-Risk Level and 39% at the Proficient Level. The lower set of pie charts illustrates similar types of changes for the students’ academic competence.

What percentages of these students were identified at each level of social-emotional competence?

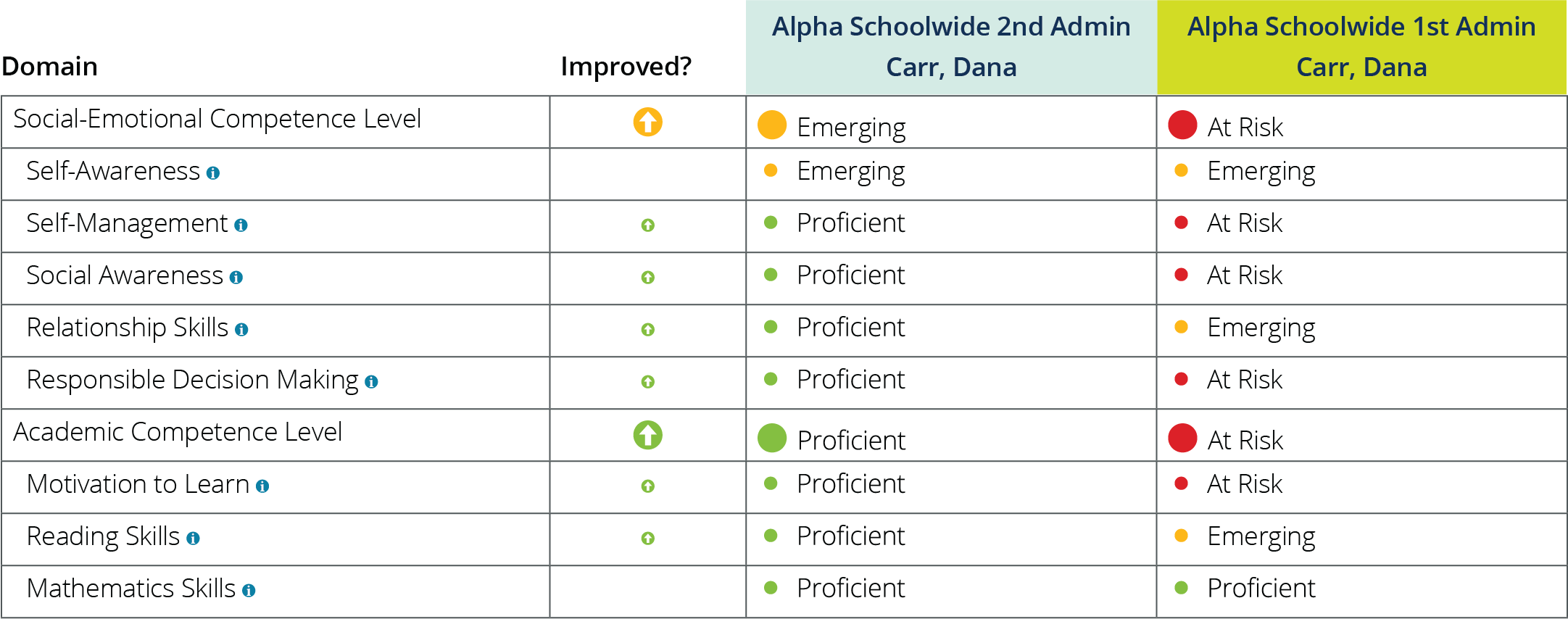
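The kind of Time 1 to Time 2 comparison summarized in the pie charts can also be tabulated by hand when only a list of each student's level is available. The sketch below is not the Review360 computation; it is a minimal illustration with hypothetical function names and a hypothetical class of ten students. Only the "At-Risk" and "Proficient" labels come from the brief; the middle label "Emerging" is an assumed placeholder.

```python
from collections import Counter


def level_percentages(levels):
    """Return the percentage of students at each level."""
    if not levels:
        raise ValueError("levels must not be empty")
    counts = Counter(levels)
    return {level: 100.0 * n / len(levels) for level, n in counts.items()}


def percentage_shift(time1, time2):
    """Return Time 2 minus Time 1 percentage points for every level seen."""
    p1 = level_percentages(time1)
    p2 = level_percentages(time2)
    return {
        level: p2.get(level, 0.0) - p1.get(level, 0.0)
        for level in sorted(set(p1) | set(p2))
    }


# Hypothetical class of ten students; "Emerging" is an assumed label.
time1_levels = ["At-Risk"] * 6 + ["Emerging"] * 4
time2_levels = ["At-Risk"] * 1 + ["Emerging"] * 5 + ["Proficient"] * 4

shift = percentage_shift(time1_levels, time2_levels)
for level, points in shift.items():
    print(f"{level}: {points:+.0f} percentage points")
```

A negative shift for At-Risk together with a positive shift for Proficient is the pattern the pie charts describe; the sizes of the shifts here are invented for illustration.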

In addition to group reports, users can examine the changes in social–emotional and academic skills for an individual student who has completed a multi-week intervention program. Another portion of the same Review360 report (shown below) compares assessment results for Dana Carr at Time 1 (right-hand side of figure) and at Time 2. The colorized and labeled levels of functioning indicate that meaningful changes occurred for four of five SEL competencies and two of three academic areas.

How does this compare to screenings done in other screening periods? Is the student improving?

Conclusions

Evaluating a new SEL intervention program is important and challenging, but achievable with the right tools and a design that allows for pre- and post-intervention assessments of students’ social–emotional skills, problem behaviors, and academic competence. This brief supports a four-step process and the use of SSIS SEL Edition assessments with Review360 scoring and reporting software as a means of evaluating the effects of an SEL program.

References

Collaborative for Academic, Social, and Emotional Learning. (2012). Effective social and emotional learning programs. Retrieved from https://casel.org/wp-content/uploads/2016/01/2013-casel-guide-1.pdf

DiPerna, J. C., Lei, P., Bellinger, J., & Cheng, W. (2015). Efficacy of the Social Skills Improvement System Classwide Intervention Program (SSIS-CIP) primary version. School Psychology Quarterly, 30(1), 123–141. doi:10.1037/spq0000079

DiPerna, J. C., Lei, P., Bellinger, J., & Cheng, W. (2016). Effects of a universal positive classroom behavior program on student learning. Psychology in the Schools, 53(2), 189–203. doi:10.1002/pits.21891

DiPerna, J. C., Lei, P., Cheng, W., Hart, S. C., & Bellinger, J. (2017). A cluster randomized trial of the Social Skills Improvement System-Classwide Intervention Program (SSIS-CIP) in first grade. Journal of Educational Psychology, 110(1), 1–16. doi:10.1037/edu0000191

Elliott, S. N., & Gresham, F. M. (2007). Social Skills Improvement System Classwide Intervention Program teacher’s guide. Bloomington, MN: NCS Pearson.

Elliott, S. N., & Gresham, F. M. (2017a). SSIS SEL Edition Classwide Intervention Program manual. Bloomington, MN: NCS Pearson.

Gresham, F. M., & Elliott, S. N. (2017). SSIS SEL Edition rating forms [Measurement instrument]. Bloomington, MN: NCS Pearson.

U.S. Department of Education. (2014). Evaluation matters: Getting the information you need from your evaluation. Washington, DC: Author. Retrieved from https://www2.ed.gov/about/offices/list/oese/sst/evaluationmatters.pdf

Yarbrough, D. B., Shulha, L. M., Hopson, R. K., & Caruthers, F. A. (2011). The program evaluation standards: A guide for evaluators and evaluation users (3rd ed.). Thousand Oaks, CA: Sage.

Stephen N. Elliott, PhD, is the Mickelson Foundation Professor at Arizona State University and the co-author of the SSIS SEL Edition Assessments and Classwide Intervention Program.

© 2018 Pearson Education, Inc. or its affiliates. All rights reserved. Pearson and SSIS are trademarks, in the US and/or other countries, of Pearson plc. LRNAS15520 EL 9/18